LARNet: Lie Algebra Residual Network for Face Recognition (Xiaohong Jia and Xiaolong Yang)

The research work "LARNet: Lie Algebra Residual Network for Face Recognition" by Associate Professor Xiaohong Jia and her Ph.D. student Xiaolong Yang, in collaboration with researchers from Tencent AI Lab, was recently accepted for publication at the 38th International Conference on Machine Learning (ICML 2021). The work embeds Lie algebra theory in convolutional neural networks (CNNs) to recognize faces with large poses, including profile faces. In comprehensive experimental evaluations on many widely used datasets, the method outperforms state-of-the-art approaches.

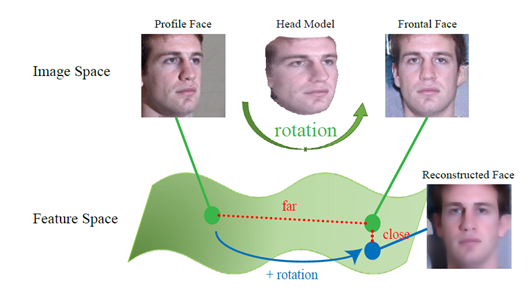

This work explores how face rotation in 3D space affects the way CNNs generate deep features, and proves that a face rotation in image space is equivalent to an additive residual component in the CNN feature space, one that is determined solely by the rotation (see Figure 1).

Figure 1: Face rotation in the image space and the deep feature space

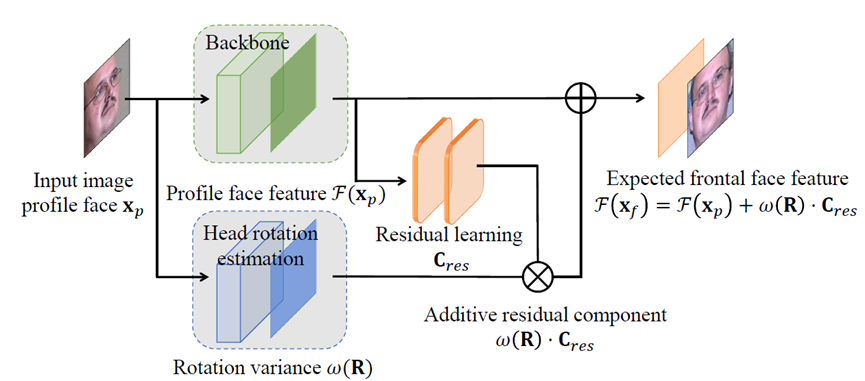
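The additive view rests on Lie theory: a 3D rotation R can be written as the matrix exponential R = exp(Ω) of a skew-symmetric matrix Ω from the Lie algebra so(3). As general background only (standard rotation math, not the paper's proof or code), the sketch below builds rotations this way in plain Python and checks that, for a fixed axis, composing two rotations corresponds to adding their Lie-algebra coordinates, while for different axes the sum is only an approximation. The helper names (`hat`, `matmul`, `expm`, `yaw`, `close`) are hypothetical, and treating yaw as a rotation about the y axis is an assumed convention.

```python
import math


def hat(w):
    """Map an axis-angle vector w = (wx, wy, wz) to its skew-symmetric matrix in so(3)."""
    wx, wy, wz = w
    return [[0.0, -wz, wy],
            [wz, 0.0, -wx],
            [-wy, wx, 0.0]]


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def expm(a, terms=30):
    """Matrix exponential via the truncated series I + A + A^2/2! + ... (accurate for moderate angles)."""
    result = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    term = [row[:] for row in result]
    for n in range(1, terms):
        # After this update, term holds A^n / n!
        term = [[x / n for x in row] for row in matmul(term, a)]
        result = [[result[i][j] + term[i][j] for j in range(3)] for i in range(3)]
    return result


def yaw(theta):
    """Rotation by theta radians about the vertical (y) axis (assumed convention): exp(hat((0, theta, 0)))."""
    return expm(hat((0.0, theta, 0.0)))


def close(a, b, tol=1e-9):
    """Element-wise comparison of two 3x3 matrices."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(3) for j in range(3))


# Same axis: composing rotations matches adding their Lie-algebra coordinates.
print(close(matmul(yaw(0.3), yaw(0.5)), yaw(0.8)))  # True

# Different axes: adding in so(3) is only a first-order approximation.
pitch = expm(hat((0.3, 0.0, 0.0)))
print(close(matmul(pitch, yaw(0.5)), expm(hat((0.3, 0.5, 0.0)))))  # False
```

The second check fails because rotations about different axes do not commute; the correction terms (given by the Baker-Campbell-Hausdorff formula) are why additivity in the Lie algebra is exact only along a single axis.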

Recent deep learning models and an increasing variety of datasets have greatly advanced face recognition technologies. However, the generalization ability of a deep model is closely tied to the size of its training data, and frontal and profile face images are unevenly distributed, with too few profile images. As a result, the deep features tend to focus on frontal faces, and the large variation between profile and frontal faces remains a major challenge in practical face recognition applications. Existing methods often expand the training set by generating additional profile faces at different angles from the original images, but this introduces substantial unnecessary computational burden and training cost.

This work instead aims to recover the deep features of the expected frontal face directly from a profile face image, enabling face recognition that is both accurate and efficient. Specifically, Lie algebra theory is embedded in the gradient optimization process of the CNN to model how face rotation affects deep feature generation in the feature space. On the network side, a residual subnet is trained to learn the contribution of rotation in profile face images, and a gating function trades off the strength of the rotation component against the deep features, finally producing the corresponding expected frontal features (see Figure 2).

Figure 2: The architecture of LARNet.

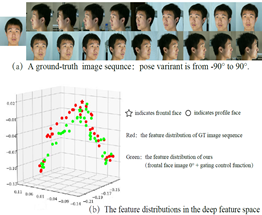
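The residual-plus-gate design can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the authors' implementation: it assumes the expected frontal feature is the profile feature plus a gated residual, uses a single hypothetical linear layer (`linear`) as the residual subnet, and a hypothetical sigmoid gate (`gate`) driven by the yaw angle in degrees. The parameters `scale` and `offset` and all weights are made-up toy values; the actual LARNet subnets, gate inputs, and training procedure may differ.

```python
import math


def linear(weights, bias, x):
    """Dense layer y = W x + b on plain lists (stand-in for the hypothetical residual subnet)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate(yaw_deg, scale=0.1, offset=-3.0):
    """Hypothetical gate in (0, 1): near 0 for frontal faces, near 1 for large |yaw|."""
    return sigmoid(scale * abs(yaw_deg) + offset)


def frontalize(profile_feat, yaw_deg, res_w, res_b):
    """Estimated frontal feature = profile feature + gate(yaw) * residual(profile feature)."""
    residual = linear(res_w, res_b, profile_feat)
    g = gate(yaw_deg)
    return [p + g * r for p, r in zip(profile_feat, residual)]


# Toy 2-D example with made-up residual weights (not learned LARNet parameters).
feat = [1.0, -0.5]
res_w = [[-0.2, 0.0], [0.0, -0.2]]
res_b = [0.0, 0.0]
print(frontalize(feat, 0.0, res_w, res_b))   # nearly unchanged: gate(0) is about 0.047
print(frontalize(feat, 90.0, res_w, res_b))  # shrunk by about 20%: gate(90) is about 0.998
```

Tying the gate to |yaw| (an assumption in this sketch) lets near-frontal features pass through almost unchanged while strongly rotated faces receive more of the residual correction; in a trained model the residual weights would be learned rather than hand-set.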

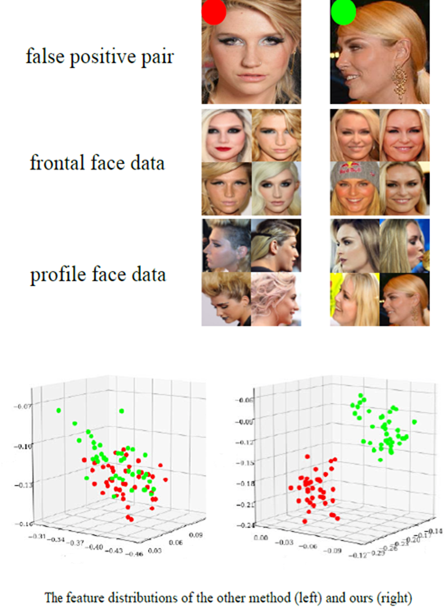

Figure 3 illustrates two properties of LARNet. The left panel shows its feature representation ability: LARNet can simulate profile face features at arbitrary angles, and the resulting feature distribution is close to that of real inputs. The right panel shows its feature clustering ability: LARNet clearly distinguishes between highly similar, challenging cases.

This work achieved leading results on a range of well-known datasets. For example, on the profile face dataset IJB-A, its results under all four metrics surpass those of many face recognition methods from the past five years; on the celebrity dataset CFP-FP, its results exceed 99% for the first time, surpassing the reported human-level performance; and its results are also superior on the general datasets LFW, YTF, and CPLFW. Reviewer comments on the paper include: “Significant contribution, advances state of the art”, “Technically strong, highly general results”, and “Technically adequate for its area, solid results”.

Reference:

Xiaolong Yang, Xiaohong Jia, Dihong Gong, Dong-Ming Yan, ZhiFeng Li, and Wei Liu. LARNet: Lie Algebra Residual Network for Face Recognition. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021).